Howdy! Sorry for the long delay since Episode 3, but I have a good excuse: I was busy helping a future starship captain make her way into the world (ain’t she somethin’?).

Today we are going to dive right back into the thick of it, with a topic that’s at the center of everything: the relational database, and how it’s kind of like a magician.

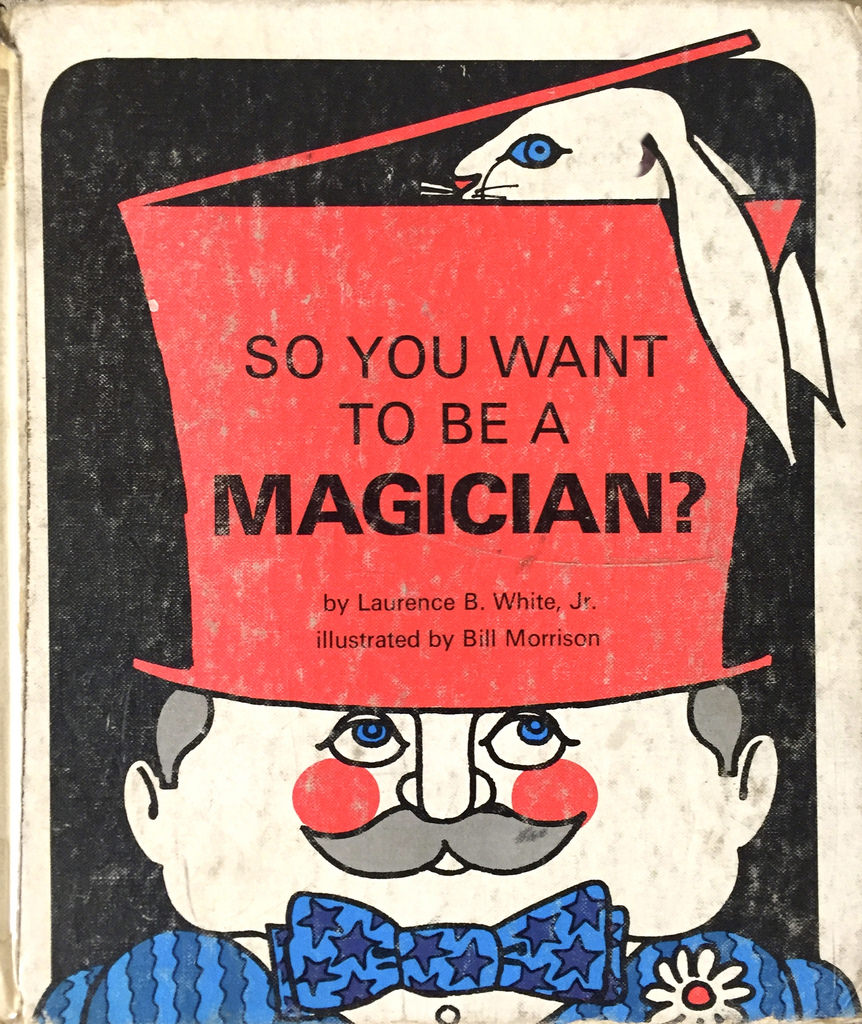

When I was a kid, I once went to see a magic show — you know, the kind with rabbits being pulled from hats. I was so inspired that I immediately got this book out of the library called “So You Want To Be A Magician?”, and started reading.

To my dismay, I quickly learned that magic wasn’t “real” … it was just sleight of hand. (Don’t worry, I still believe in real magic). Magic seems to break the laws of physics, but it’s an illusion, exploiting the quirks of human perception. Of course, this doesn’t really matter to the audience: we all know it’s an illusion, but it still delights us.

In technology, many things behave like that. We know that behind the scenes, it’s zillions of 1s and 0s racing along, but when the end result is mapping the human genome or landing astronauts on the moon, it’s hard to describe that as anything other than magic. The basic function of computers, as far as I care, is to make magic real enough, so we can get what we want — whether that’s for business, or for humanitarian things like helping homeless families find housing, or teaching girls to code. Technologies are best when they get out of your way and let you do meaningful things in the real world.

Of all of the technologies I’ve worked with, relational databases are one of the few that give me that magic feeling every time. Why are they so special? Because they decouple “what you want” from “how you get it”.

To see why that matters, let’s take a quick trip down memory lane, for perspective.

In the 1960s, computer programs stored their data in files or hierarchical databases. This meant that the physical (on-disk) location and arrangement of data was always foremost in mind when writing programs. And, it meant that you pretty much had to know exactly how you wanted to use that data, right from the start. (Which, usually, you don’t.)

What relational databases gave us was a nice bit of abstraction: using some set theory and graph theory, Edgar Codd revolutionized the world of data by saying that the logical structure of the data (as described in entities and attributes) could be independent of the physical structure (as needed for storage and retrieval). So your program can mirror the way you think about things in the real world (say, “families” and “housing options”), rather than solely in terms of how the bits are stored or combined by the machine (say, “files” and “records”). So instead of this (pseudocode):

Open //data/hfc/schema/family_need_dates as fnd_extents

Scan fnd_extents for range ‘P-Q’

Load as fnd_extent

Open //data/hfc/schema/open_dates as od_extents

Scan od_extents for range ‘2016–09–2016–10’

Load as od_extent

For each record f in fnd_extent {

For each record d in ond_extent {

If f.family_name = ‘Smith’ and d.date = ‘2016–09–01’ {

Add f.family_name, od.location to result

}

}

}

Close dates_extent

Close families_extent

You can instead just say:

select fd.family_name, od.location

from family_need_dates fd, open_dates od

where

fd.date = od.date

and last_name = ‘Smith’

and od.date = ‘2016–09–01’

You’re not telling the database how to do its business; you’re just declaring what you want, and all the details are hidden from you. Any way you want to query those entities is possible, without regard to how the data is physically stored.

Of course, the data is still physically stored in a particular way (it’s still ones and zeros on disk, after all). But in the relational model (and its most common access language, SQL), the database takes care of the hard parts for you, using clever algorithms for query plan optimization, caching, and indexing so that the illusion is maintained: you just ask for what you want, and the database hands it to you. It’s what has made relational databases the dominant form of storage for decades, and it still never ceases to amaze me. (If you still don’t quite get why this is so neat, I’d recommend this presentation I did a couple years ago at South By Southwest Interactive.)

So, flash forward to 1999, when Salesforce was founded. The Salesforce engineering OGs (Parker Harris and Dave Moellenhoff) were already very familiar with relational databases, because that’s how all the on-premise software of the day worked. But they took it one step further: since they were running a single instance of Salesforce’s software in the cloud, backed by a single relational database, they needed to make one more leap: each tenant needed the ability to define their own data entities, with a totally isolated view of their own world.

Thus was born the Salesforce multitenant platform architecture (which is extensively described in this 2009 SIGMOD paper; an updated version lives in this article by Salesforce architect Steve Bobrowski, who co-authored the original SIGMOD paper). It’s worth reading in full, but the key point, for our purposes here, is that the underlying essence of this architecture is still the relational database. That core illusion — that the data is just “out there” and you can ask any question you want — is the same.

The Salesforce data model isn’t a standard (ANSI-compliant) relational database model. The query language (SOQL, the Salesforce Object Query Language) makes a number of modifications away from SQL, mostly to simplify things for less technical users, and to make things work well in a shared environment. (For example, certain columns like “modification date” are automatically present on all objects; and there are a fixed set of relationship types you can use to connect entities, which control things like deletion behavior). But the basic premise of declarative, logical access to entities — that’s the same.

And the promise of doing this as a service (rather than rolling your own database) is: it all just works, whether you’re a technical user or not. It’s a bold promise to make, and our job in Salesforce engineering is to make sure it’s true for our customers, every day.

Now, then. All of this talk about “magic” is making my unicorn detector go crazy. It sounds too good to be true! Is it?

In a sense, yes. Something I didn’t point out too explicitly above is: from a user’s perspective, when you’re using Salesforce’s shared relational database, scalability isn’t typically something you have to worry about. In most cases, you’d write the same code for 5 users that you would for 500 or 5 million. The platform makes it happen transparently.

But: it turns out that in the real world, actually maintaining this level of usability is a lot of work. Just like a magician has to practice an insane number of hours before sawing her assistant in half on stage, running a service that provides relational database capabilities — at the rate of several billion requests per day — is no parlor trick.

So, let’s talk about a couple ways in which this particular database-centric architecture dictates the day-to-day scaling challenges we face, behind the scenes.

Query Planning. So first off, an important thing to recognize about what I’ve said above is that Salesforce’s database architecture is not just one magic trick, it’s two, stacked on top of each other. Yes, we run a large physical relational database, which provides an abstraction over the storage hardware. But we also run a large multi-tenant software application over the physical relational database, which provides another layer of abstraction. This is how we can have such an open-ended platform, where each tenant can create their own data shapes. So when you create, say, a “volunteer opening” object in Salesforce, it doesn’t actually create a “volunteer opening” table in the underlying relational database; it stores metadata mappings between your logical schema and the physical representation — which is a small set of general purpose tables that map attributes in your schema (like “shift.start_time”) to generic positional columns in the underlying tables (like “custom_data.column_34”), along with type information, relationships, etc.

So in other words, we have even more work to do when it comes to executing queries efficiently than a relational database does out of the box. Usually, a database will handle query planning on its own; it keeps detailed statistics on the sizes and makeup of individual tables, so when it has to execute something expensive (like a JOIN of two tables) it can do so in the most efficient way possible. But because we stack that second layer of abstraction atop the first, all of this goes right out the window. All the queries we run use statistics that we gather and maintain ourselves, using physical query plans we specify exactly, using extensive SQL query hints.

If that sounds hard, then … you’re right, it’s hard. We have teams of engineers who live and breathe relational database query plan optimization techniques. (Fun folks to have lunch with, let me tell you … if you, uh, like that kind of thing. Which I do.)

Workload Tuning. As I alluded to above, the fact that Salesforce is an open-ended platform means that people build all kinds of things on it — really, anything that helps them connect to their customers in a whole new way. Marketing, customer support, financials, health care, higher education — you name it. So that means that there is a very wide range of behavior types. Some customers use a lot of small, short queries to write and read individual records, or stream individual record changes out to integrated systems. Some customers use a lot of heavy-duty reports to aggregate and summarize their data, or export it to Wave (the Analytics Cloud). Some do both. In database terms, this is what you might call a “mixed OLTP / OLAP workload” (OLTP = “online transaction processing” and OLAP = “online analytical processing”).

Remember, though that this is all happening concurrently on the same database. And it turns out that underneath it all, the magician is still beholden to the laws of physics; CPUs and disks are fast, but they’re not infinitely fast. So tuning the work that our customers do is a big part of our operations.

Here’s an example. Let’s say you have an object in Salesforce that contains a hundred records (let’s call it “appliance”). And then, let’s say you have another object that contains a hundred million records (let’s call it “sensor_reading”). If you create a report or SOQL statement that connects these two things, it’s pretty important how that query gets physically executed. If it has to scan a hundred million records every time you run it, that’s going to use a lot of resources, and take a (relatively) long time. If instead you can just scan 100 records and then do fast lookups into the hundred million records, it’ll be cheap and nearly instantaneous.

And not only does that difference affect the individual customer who’s running the query, but — surprise — it affects everyone else, too. Not all that much, mind you (the pool of resources is very big, after all), but a little bit. And, when you add that up across the whole customer base, it has the potential to wreak havoc on performance and scalability.

There are a few things we do for this. Obviously, we do our darndest to make sure the magic “just works”; if there are invisible tricks and optimizations we can do across the board, that’s where we start. But sometimes, what a customer query is asking the system to do is weird, or unexpectedly expensive, and we have to respond (fast!).

There are two ways we do this: through proactive monitoring, and through limits.

Proactive monitoring uses the sizeable infrastructure we have for system visibility (using Apache Kafka, as we’ve blogged about previously) to quickly discover when something is going off the rails. Our engineering teams keep a close eye on system health, and can quickly determine if usage by one customer is driving an inordinate amount of load. We can then either reach out to that customer, or temporarily throttle the speed of those transactions until we get things under control. (We only do this in dire circumstances, of course.)

The other approach is using pre-set limits, on things like number of API queries, or the number of attributes in a single object. This is more of a “failsafe” mechanism, based on our historical analysis of what usage patterns might cause problems. Like any heuristic, they aren’t perfect; they don’t catch every problem, and sometimes they stop things that wouldn’t have been a problem. But, on the whole they’re useful.

There are plenty of other feats of alchemy that our engineering teams pull off as a part of running our high-scale relational databases, and we’ll talk about more of them in future episodes (like transactionality, access control, massive physical data storage, and more). But hopefully I’ve impressed on you the key point: that the unique character of the relational database has advanced the state of the industry (in general, and at Salesforce) by providing a strong abstraction between “what you want”, and “how you get it”.

IMO, providing that—reliably at scale—is the biggest rabbit you could pull out of your hat.

Thanks to Shane Sigler, Steve Bobrowski, and Paul Constantinides for mucho input into this post.

Wanna read more from The Architecture Files? Continue on to episode #5.